How can journalists identify fake photographs when so many dramatic images are being shared at speed on social media?

Fact-checking journalist Deepak Adhikari, the editor of Nepal Check, has shared a piece he wrote about the spread of AI-generated images following an earthquake in Nepal in November 2023.

The article, below, explains how his organisation and others set about identifying the fake photographs.

Deepak hopes the methods he and his team used will be of use to other journalists trying to combat the spread of fake images on social media and in other news output.

How to detect AI-generated images

Following the devastating earthquake that struck Jajarkot district in Karnali Province in early November 2023, social media users shared AI-generated images claiming to show the damage it caused.

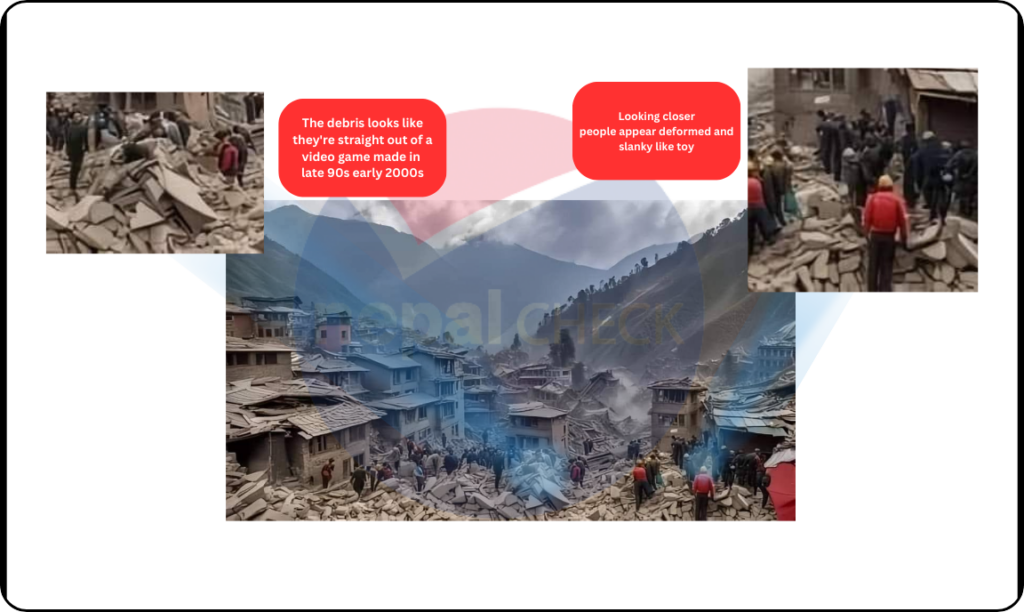

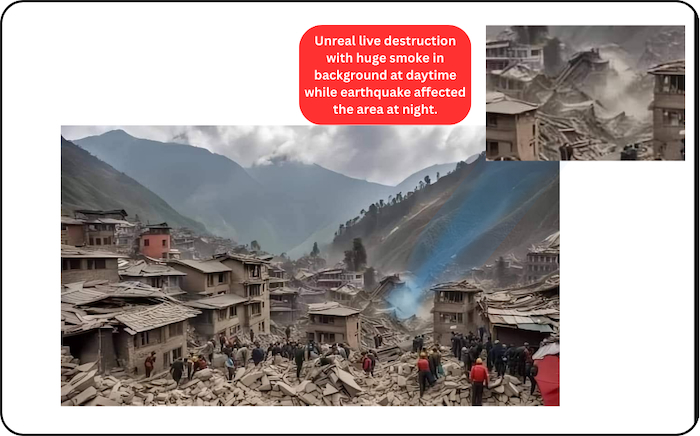

One photograph showed dozens of houses ruined by the earthquake with people and rescuers walking through the debris. The photo was initially shared by Meme Nepal. It was subsequently used by celebrities, politicians and humanitarian organisations keen to draw attention to the disaster in one of Nepal’s poorest regions.

The image was used by Anil Keshary Shah, a former CEO of Nabil Bank, and Rabindra Mishra, a senior vice president of the National Democratic Party. (See archived versions here and here.)

When Nepal Check contacted Meme Nepal in an attempt to find the original source of the photo, they replied that they had found the image on social media.

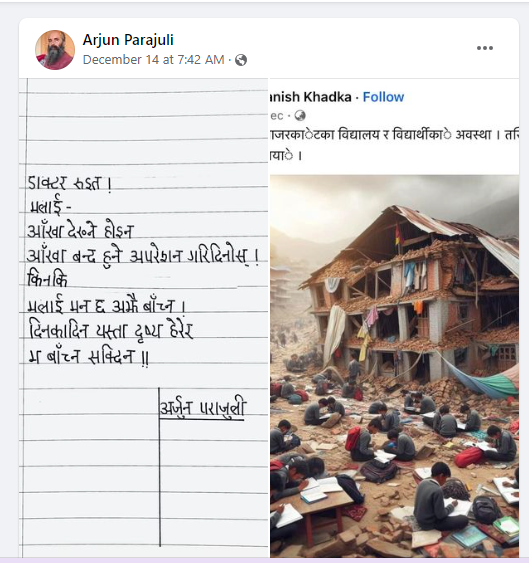

A month on, AI-generated images supposedly showing the aftermath of the earthquake were still circulating. On December 14, 2023, Arjun Parajuli, a Nepali poet and founder of Pathshala Nepal, posted a photo claiming to show students studying in the ruins left by the earthquake in Jajarkot. Parajuli, who attached the photo to a poem, had reshared the image from Manish Khadka, who identifies himself as a journalist based in Musikot in Rukum district.

Both of these viral and poignant images were fake. They were generated using text-to-image platforms such as Midjourney or DALL-E.

In the digital age it’s easy to manipulate images. With the rise of AI-enabled platforms it’s possible to generate images online quickly and convincingly. AI-generated images have evolved from amusingly odd to realistic. This has created further challenges for fact-checkers who are already inundated with misleading or false information circulating on social media platforms.

Fact-checkers often rely on Google's reverse image search, a tried and tested tool for tracing where an image has appeared before. But Google and other search engines can only find photos that have previously been published online, so a newly generated image may return no matches at all.

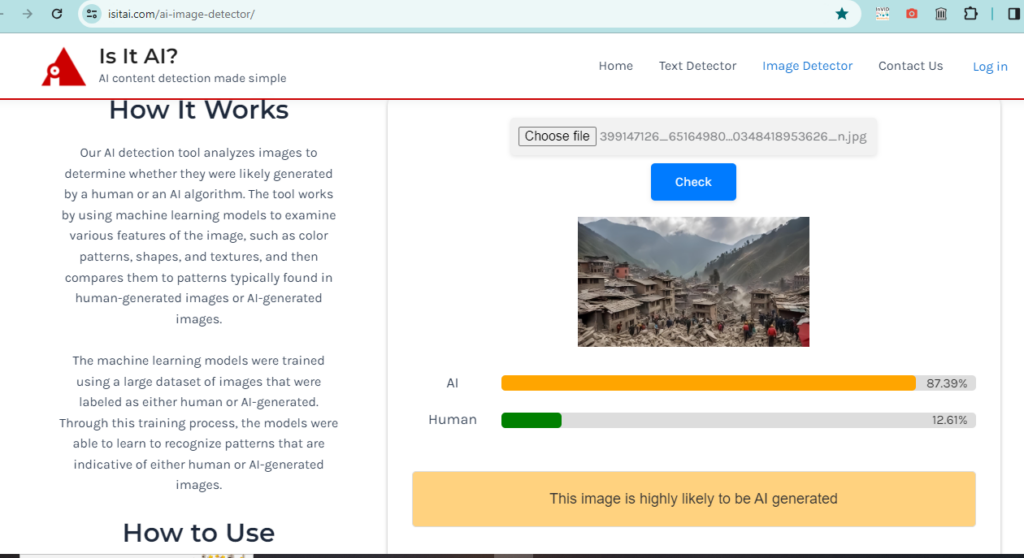

So, how can one ascertain if an image is AI-generated? Currently, there is no tool that can determine this with 100% accuracy.

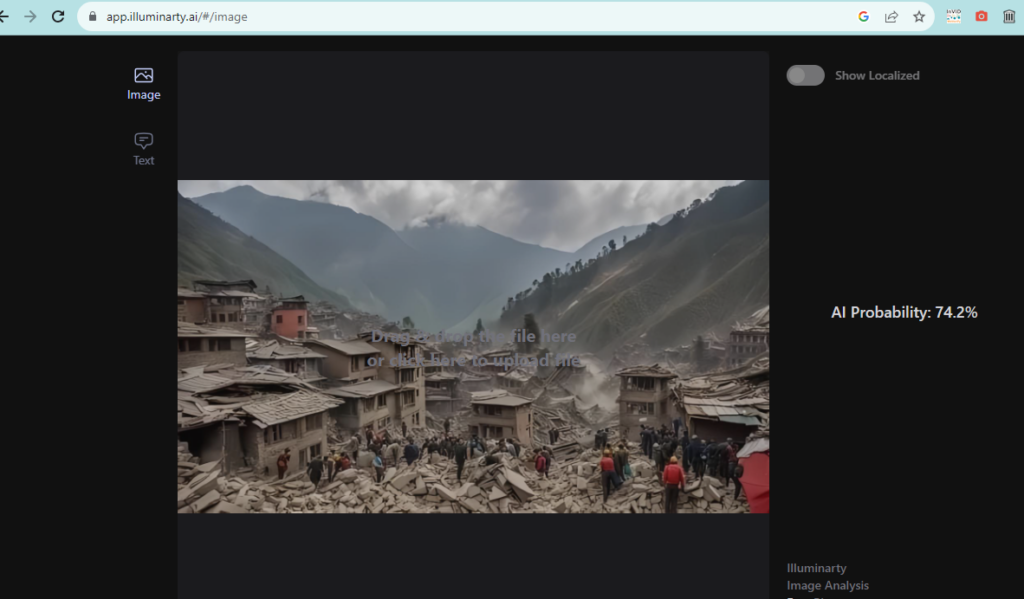

For example, Nepal Check used Illuminarty.ai and isitai.com to check whether the earthquake images had been generated using AI tools. When an image is uploaded, these platforms show a percentage indicating how likely it is to have been generated by AI.

Nepal Check contacted Kalim Ahmed, a former fact-checker at AltNews. He made the following observations about the image claiming to show the devastation caused by the earthquake in Jajarkot:

- If you zoom in and take a closer look at the people, they appear deformed and toy-like.
- The rocks and debris at the centre look like they're straight out of a video game made in the late 1990s or early 2000s.

In the absence of a foolproof way to determine whether a photo is AI-generated, using observational skills and looking for visual clues is the best way to tackle such images.

A healthy dose of skepticism about what you see online (seeing is no longer believing), tracing the source of the content, checking whether any evidence supports the claim, and looking for context are powerful ways to separate fact from fiction online.

In a webinar organised by the News Literacy Project in August this year, Dan Evon urged users to keep asking questions, such as "Is it authentic?" According to him, AI-generated images often have unusually smooth surfaces, which can be a giveaway. "Everything looks a little off," he said.

Dan suggests looking for visual clues, adding that it is crucial to establish the provenance of an image. Experts caution that content often goes viral on social media because it provokes outrage or controversy, so emotionally charged material deserves particularly careful scrutiny.

In her comprehensive guide on detecting AI-generated images, Tamoa Calzadilla, a fellow at the Reynolds Journalism Institute in the US, encourages users to pay attention to hashtags that may indicate the use of AI in generating the content.

While AI has made significant progress in generating realistic images, it still struggles to accurately replicate human features such as eyes and hands. "That's why it's important to examine them closely: Do they have five fingers? Are all the contours clear? If they're holding an object, are they doing so in a normal way?" Tamoa writes in the guide.

Experts recommend that news media tell readers and viewers when an image is AI-generated. Social media users are also advised to disclose this publicly when they share such images, to help curb the spread of misinformation.

Although the images purporting to depict the earthquake in Jajarkot lack a close-up view of the subjects, closer examination shows that the people in them resemble drawings rather than real humans. Nepal Check also compared the viral AI-generated images with those published by news media. We could not find any such images in mainstream media coverage of the earthquake's aftermath.

Lesson plan for trainers

If you are a trainer of journalists we have a free lesson plan: Artificial intelligence and journalism which you are welcome to download and adapt for your own purposes.